This is a good place to start. Watch this one-minute video.

Imagine if, instead of a Morgan Freeman character, it was a famous politician. What if it was someone pretending to be Donald Trump talking about draconian measures in society during a second term as President? Or suppose it was an image of Dr. Fauci admitting that he was secretly responsible for COVID, or a President Biden ringer “confessing” that he was involved in some worldwide conspiracy. Would you believe any of these scenarios? How could you know what to believe, what was true and what was not? At a time when Artificial Intelligence is being used to call dead actors back from the grave, how do we know where reality ends and fiction begins? And using contrived postmortem images or videos of famous people opens the door to legal issues as well.

Another story just came out last week about a deepfake image, this time concerning someone running for public office in Europe.

Meet Juliette de Causans. Juliette is a 40-year-old teacher and attorney (she has a doctorate in law from the University of Paris) with a specialty in digital law and communication. She is a candidate in France’s senatorial election this fall. In her earlier campaign photos (see left), the candidate looked attractive, though perhaps not stunning, as many candidates do. Perhaps the constituents in her district are ordinary, unpretentious citizens and it was important that they could relate to their senator. However, artificial intelligence (AI) programs were then used to enhance her image and make her more glamorous, more “Hollywood.” The result is pictured below, taken from her campaign website. Is this the same woman?

When is a fake not a fake?

Many readers who commented on the different websites that covered this story believed that she was deceiving the voters. Personally, I could not understand the criticism. There was nothing in the enhanced photo that could not have been accomplished in a day at a spa with experts in hair styling, perhaps wigs or hairpieces, cosmetics, and so on. To me, this wasn’t much different than a photo of a man after he edited out some gray hair around his temples.

I Googled “deepfake images online” and in seconds almost a dozen AI-generated face swap programs came up. Some of the more popular or powerful ones are DeepFaceLab, Anyone Swap, Deepswap, Reface, Face Swap Live and Face Play. Other DIY programs allow you to manipulate selfies and portraits, such as turning a person’s face into a cartoon-like image or morphing a person’s mouth in a video to match the lyrics of a song. Even barnyard animals can be made to look like they are singing along convincingly to “Old MacDonald Had a Farm.”

But a deepfake photo or video can lead people to form a completely wrong opinion of someone. Last year, one or more individuals discovered that if you downloaded a video clip of former Speaker of the House Nancy Pelosi and artificially slowed down her speech, you could make it sound like she had been drinking. This crude approach is called a “shallowfake.” People viewing the video who had always, and perhaps unfairly, despised Pelosi had no problem believing that she was drunk. To them, the clip played into their confirmation bias. The average person who knew little about media manipulation had no explanation for what he or she saw, except that Representative Pelosi seemed drunk. Her supporters and those members of the press who were there when the video was taken knew better, however, and they refused to “believe their eyes” when the video came out on social media.

There are also convincing photos of former president Donald J. Trump in a jail cell. But these are obviously deepfake photos as well.

What I hope to do with this post is to provide ways of evaluating images to see if they seem genuine or not. If you are the sort of person to accept things at face value, you may not find this to be particularly informative, but I encourage you to read it through nonetheless.

Where does the term “deepfake” come from?

The term “deepfake” is a compound of “deep,” from deep learning, and “fake.” Image manipulation itself is much older. Since the invention of photography, photographers have been manipulating their images to reduce glare and to highlight certain features while cropping out others. Most photographers, if not all, have used dodging, burning and similar techniques at some point. When digital imaging arrived in the 1990s, such manipulation became much easier, and it opened up other possibilities as well.

National Public Radio (NPR) ran a story on how to uncover deepfake photos. They quoted a researcher named Mike Caulfield, who shared the SIFT technique. SIFT stands for Stop; Investigate the source; Find better coverage; and Trace the original context. As NPR explains: “The overall idea is to slow down and consider what you’re looking at — especially pictures, posts, or claims that trigger your emotions.”

“A good first step is to look for other coverage of the same topic. If it’s an image or video of an event — say a politician speaking — are there other photos from the same event?

“Does the location look accurate? Fake photos of a non-existent explosion at the Pentagon went viral and sparked a brief dip in the stock market. But the building depicted didn’t actually resemble the Pentagon.”

You should also double check whatever your chatbot tells you. For example, ChatGPT is vulnerable to the same errors that you are when something sensational occurs and hundreds of thousands or more people are typing in the same search words.

An example of a deepfake image

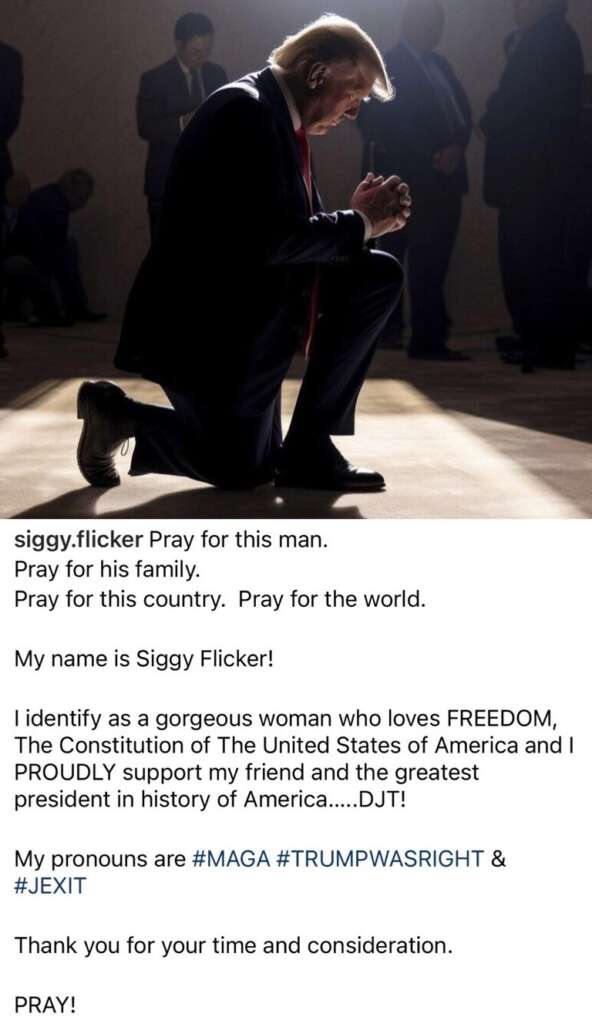

Here (right) is a post with an image from Truth Social. It appears to have originated with someone called Siggy Flicker and was reposted by Donald Trump. It does not seem to be a genuine image.

Euronews points out some other clues:

“. . .a closer look at this image shows the AI image generator messed up his hands clasped in prayer. The right one is missing a finger and his thumbs are distorted.

“The background is another giveaway: it doesn’t look very realistic for the former president to be in a room kneeling with a spotlight on him with no one around looking at him.”

Of course, some followers might say it was a staged photo of the former president, but then, would he go to the trouble of doing this when he is leading in the polls?

Here is another deepfake image from Twitter (now called X). It is the well-known image of Pope Francis in a white quilted coat. Euronews notes:

“In the AI-generated image of the pope wearing a white puffy jacket, his glasses are deformed and don’t seem to fit right. Also, a quick look at his right hand reveals that the water bottle he’s holding has a strange shape that makes it look like it has melted.”

The dark side of deepfakes

Much deepfake photo and video content is pornographic.

This is from a story in the Telegraph dated September 20, 2023. It shows how evil minds can manipulate technology to libel people, even minor children:

“Police in a Spanish town are investigating after dozens of schoolgirls reported that AI-generated images showing them posing naked were being shared around schools.

The ages of the victims known so far range from 11 to 17. The police have reportedly identified seven suspects involved in the creation and distribution of the images, known as deepfakes, which are generated with apps that use machine learning to combine a photograph of the victim’s face with pornographic imagery from the internet.

Miriam Al Adib, the mother of one of the victims, said that her 14-year-old daughter showed her a photo of herself apparently naked.

‘If I didn’t know my daughter’s body, this photo looks real,’ said Ms Al Adib, who took to her Instagram account to warn others of the situation and urge them to report it[1].”

Deepfake video

Deepfake video is another challenge. According to The Guardian newspaper:

“In 2018, US researchers discovered that deepfake faces don’t blink normally. No surprise there: the majority of images show people with their eyes open, so the algorithms never really learn about blinking. At first, it seemed like a silver bullet for the detection problem. But no sooner had the research been published, than deepfakes appeared with blinking. Such is the nature of the game: as soon as a weakness is revealed, it is fixed.

“Poor-quality deepfakes are easier to spot. The lip synching might be bad, or the skin tone patchy. There can be flickering around the edges of transposed faces. And fine details, such as hair, are particularly hard for deepfakes to render well, especially where strands are visible on the fringe. Badly rendered jewellery and teeth can also be a giveaway, as can strange lighting effects, such as inconsistent illumination and reflections on the iris.”

The pupils in the eyes are worth looking at carefully in still photos as well. The reflections in the two pupils should look identical and appear to be coming from the same light source.

Today, criminals are using live deepfake video to pass themselves off as the person they are impersonating. If you are on Zoom, Vimeo, GoToMeeting, etc. and you suspect you may be talking to a deepfake re-creation, ask the “person” you are speaking with to turn their head sideways. This is because “deepfake AI models, while good at recreating front-on views of a person’s face, aren’t good at doing side-on or profile views like the ones you might see in a mug shot.”

Deepfake images and videos and the law

U.S. law tends to be a day late and a dollar short when it comes to technology, such as people stealing Wi-Fi signals and so on. However, U.S. Congressman Joe Morelle (D-NY) has authored the “Preventing Deepfakes of Intimate Images Act,” and you can read about it here.

Footnotes

[1] In fact, “the AI firm Deeptrace found 15,000 deepfake videos online in September 2019, a near doubling over nine months. A staggering 96% were pornographic and 99% of those mapped faces from female celebrities on to porn stars” according to The Guardian.